In this blog, Ruby Gourlay, Fivecast Tradecraft Advisor, explores the risks posed by rapidly advancing Artificial Intelligence (AI) and the potential threats of deepfakes to the electoral process. Ruby is an intelligence-trained professional, with qualifications in International Relations and Sociology.

Although Artificial Intelligence (AI) is a recent phenomenon, we are already surrounded by it in our daily lives – consider Siri, facial recognition to unlock our phones, lane-assist features when driving, and Google search capabilities customized to meet our individual interests. With AI, previous technological limitations are lessened, and users either gain additional benefits or see substantial improvements to existing tasks, such as driving. However, there is one emerging malign application of AI with the potential to impact democracy on a scale previously considered impossible.

In the last decade, AI has been employed to create or deploy “deepfakes,” where imagery, videos, or voices are subtly altered or entirely falsified to push a narrative, to demean or exploit the subject(s) in the material, or to manipulate the recipient into doubting authoritative sources, falling for a scam, or supporting a hidden agenda. While manipulated images are not a new phenomenon, the AI used to generate deepfake material has grown increasingly sophisticated in the last five years and, in many cases, the results can only be detected by professionals leveraging anti-deepfake programs. A casual observer may not be aware that a deepfake is being employed. Politicians can be vilified, elections can be stolen, and unsuspecting relatives may receive calls pleading for funds in the voices of their family members.

The Rapid Evolution of Deepfakes

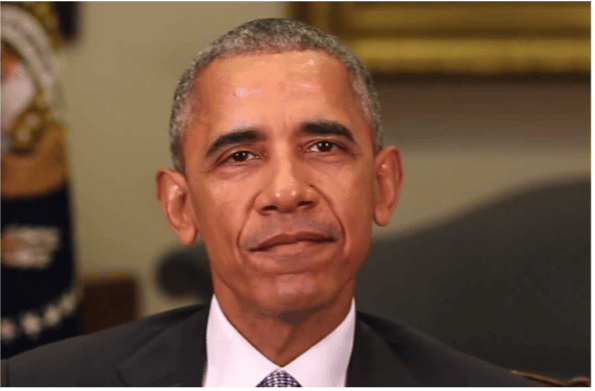

Deepfake AI refers to facial swapping and voice impersonation technology, which is becoming increasingly realistic. With deepfakes, the face or voice of an individual can be digitally modified so that they appear as someone else. Many deepfakes have been created light-heartedly. Notably, in 2018, popular director Jordan Peele orchestrated a deepfake involving former U.S. President Barack Obama to act as a public service announcement. The purpose of the deepfake was to encourage viewers to not always believe what they see on the internet. Peele’s video highlighted a critical threat – deepfakes can be used to maliciously target high-profile individuals, including politicians.

An image of a Barack Obama deepfake orchestrated by Jordan Peele and BuzzFeed in 2018

Concern surrounding the technology has increased recently, particularly due to the profane nature of some of the imagery produced. While deepfakes can seem innocent, the danger they pose is becoming increasingly potent. As technology advances, so does the detail of deepfake visuals, audio, and movement. While some deepfakes are created in jest, many are deployed for more sinister purposes. The technology can be used to generate celebrity pornography, revenge pornography, and child exploitation material.

The Threat to Democracy

Regulations and laws surrounding deepfakes are still in their infancy, resulting in a lack of detection and deterrence mechanisms while the technology and the threats it poses race ahead in sophistication and scope. The increase in deepfake creation demands the swift and thorough establishment of policy frameworks to address the implications for institutions and individuals.

The widespread dissemination of deepfakes with malicious intent places intense pressure on democratic processes, such as elections. Much like historical disinformation campaigns, deepfakes targeting the political process have real potential to alter electoral outcomes. Voters who fall victim to deepfake disinformation may find that their resulting vote does not align with their true political beliefs, in effect surrendering their vote to the perpetrator of the deepfake.

In recent years, political deepfakes have been deployed frequently to spread disinformation, interfere with critical democratic processes, or inflict severe emotional or physical harm on targeted individuals. Many political leaders have already found themselves to be victims of deepfake hoaxes:

- During the 2023 presidential election in Türkiye, candidate Kemal Kilicdaroglu accused Russia of deploying deepfake material to influence the electoral process.

- During the 2023 election in Slovakia, a deepfake audio recording of Progressive Slovakia leader Michal Simecka emerged, in which he appeared to discuss buying votes and rigging the election.

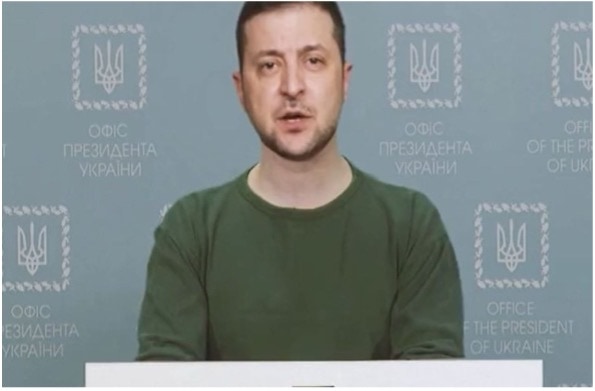

- In 2022, at the height of the Russia-Ukraine war, Volodymyr Zelenskyy was targeted by a deepfake video in which he appeared to ask Ukrainian soldiers to ‘lay down their weapons’.

While national legislation is highly recommended for the regulation of deepfake content, monitoring compromising deepfake activity also relies on individual online platforms creating and enforcing guidelines that protect individuals against malicious deepfake content. Online platforms, such as social media, must develop algorithms that can authenticate materials to protect genuine content. Because online platforms operate on a transnational basis, users should be held accountable under both the platforms' enforced guidelines and local legislation.

An image of a Volodymyr Zelenskyy deepfake published in 2022.

Unmasking Deepfakes: A Way Forward

Many perpetrators of deepfakes are connected with known malicious groups and adversarial nations, and the messaging they push mirrors the nation’s official stance, doctrine, and propaganda. Given this, the rapid advancement of deepfake AI requires national security and law enforcement analysts to continually up-skill to stay informed about upcoming threats to leaders and institutions. The use of Open-Source Intelligence (OSINT) can assist in the attribution of distributed deepfakes. By using OSINT products, such as Fivecast ONYX, analysts can detect and collect insights into this emerging technology. Fivecast ONYX is equipped with AI-enabled risk analytics, allowing analysts to identify and monitor narratives, sentiment, keywords, and phrases. Through curated risk detectors, analysts can continue to monitor the evolving digital landscape for potential security risks.