In this blog, a Fivecast Tradecraft Advisor explores the evolution of Russian disinformation, analyzing its impact on the war in Ukraine and how disinformation has shaped the online narrative.

What is ‘Disinformation’?

“False information deliberately and often covertly spread (as by the planting of rumors) in order to influence public opinion or obscure the truth” – Merriam-Webster

For Western governments, the challenge posed by Russian disinformation is nothing new; it traces back to the early days of the Soviet Union. In the 1920s, the Soviet government established a special department dedicated to spreading “dezinformatsiya.” This department, known as the “Special Disinformation Office,” was responsible for planting false stories and forged documents in the Western media.

Following the collapse of the Soviet Union and the end of the Cold War, Russia continued to use disinformation, but its methods have evolved. In the past, Russian disinformation was often spread through traditional media outlets, such as newspapers and magazines. In recent years, it has increasingly spread online through social media and online news platforms.


Post-Cold War Evolution

The objectives of Russian disinformation have not changed since Soviet times. The aim is to influence and manipulate audiences by obfuscating facts, confusing issues, and creating false narratives. Online news and social media platforms have made it easier for Russia to reach larger audiences and amplify disinformation through a network of official or state-sponsored news outlets. In today’s information environment, observers note that modern Russian disinformation is high volume, rapid, and repetitive. It lacks commitment to objective reality and consistency, an approach characterized by the RAND Corporation as “the firehose of falsehood.”

Whether they are official organizations, representatives, state-sponsored media outlets, or bot (fake) accounts, all these actors form part of an ecosystem of lies operating as a decentralized network of autonomous entities echoing each other. This activity forms part of Russia’s approach to hybrid warfare, a strategic-level effort to shape a target state’s governance and geostrategic orientation in which all actions, up to and including the use of conventional military forces in regional conflicts, are subordinate to an information campaign. The deployment of this disinformation ecosystem and strategy was exemplified in the build-up to, and subsequent war against, Ukraine.

Read our blog on the Adversarial Tactics for Shaping OSINT

The war in Ukraine

Disinformation formed a key facet of Russia’s propaganda efforts to shape the information space in the lead-up to the February 2022 invasion of Ukraine. Disinformation efforts centered around the falsehood that Ukraine was a Nazi regime and that Russia had undertaken a “denazification” mission. Other prominent falsehoods to emerge in Russian disinformation included claims that Ukraine was committing genocide against Russian speakers in the Donbas region and that Ukraine was using U.S. research labs to develop biological weapons. The biological weapons falsehood was soon picked up and amplified by extreme right-wing conspiracy media such as Infowars and by the QAnon conspiracy movement. Subsequently, it was broadcast by political commentator Tucker Carlson to millions of viewers on Fox News, creating what was essentially a feedback loop for Russian state propaganda.

Following the invasion, we also witnessed how Russia has manipulated ‘fact-checking’ as a form of disinformation through pseudo fact-checking websites and channels such as “War on Fakes”. War on Fakes purports to be a fact-checking service. However, its “fact-checks”, which claim to debunk non-existent examples of Ukrainian disinformation, are in fact strands of Russian disinformation in disguise. Critics argue that they add to the ‘noise’, which is designed to overwhelm the audience and ultimately make them suspicious of official information.

Influencers profiting from propaganda

A recent investigation by BBC News indicates that so-called “Z-bloggers”, Russia’s pro-war social media influencers, have taken advantage of a surge in advertising revenue, monetizing the dissemination of Kremlin propaganda. The BBC discovered that some of the most prominent “Z-bloggers”, behind popular Telegram channels such as WarGonzo and Grey Zone, quoted prices of hundreds of pounds per advertising slot on their Telegram channels.

This comes amid criticism that Western social media companies have not done enough to curb Russian disinformation. A recent report by the European Commission concluded that “Over the course of 2022, the audience and reach of Kremlin-aligned social media accounts increased substantially all over Europe.”

Russian disinformation in the aftermath of the central Kramatorsk strike

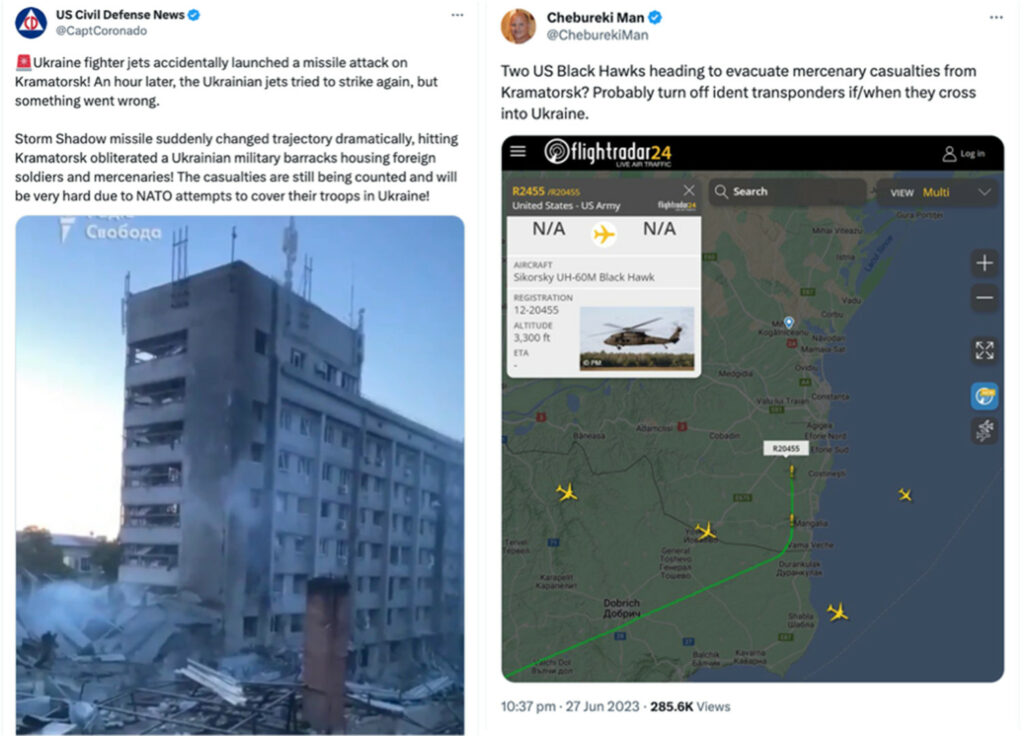

A recent investigation by the non-profit Centre for Information Resilience (CIR) outlined how disinformation narratives spread on social media following the 27 June 2023 Russian missile strike on a restaurant and hotel complex in central Kramatorsk, which killed several civilians. CIR identified two seemingly contradictory disinformation narratives: that this was a Russian attack on Ukrainian and NATO soldiers, and that it was an accidental misfire by a Ukrainian-launched, British-made Storm Shadow missile. CIR found that the narratives were fused by right-wing conspiracy theorists in the U.S. and subsequently shared with millions on social media, giving the original Russian disinformation significant international traction that it would not ordinarily have achieved.

Examples of false narratives in Russian disinformation amplified by right-wing conspiracy theorists following the 27 June 2023 Kramatorsk missile strike.

The Value of OSINT

Open-Source Intelligence (OSINT) plays an important role in identifying and monitoring Russian disinformation campaigns. By its nature, disinformation is publicly available and intended to be disseminated as widely as possible in the places where people communicate, get their information, and engage with new ideas. This increasingly happens online and on social media platforms. Without OSINT and Social Media Intelligence (SOCMINT), finding the disinformation campaigns themselves would be incredibly difficult. Once a possible disinformation campaign has been identified, OSINT aids in assessing its engagement and spread and in understanding its possible aims. OSINT might also assist with attributing the source of disinformation campaigns, especially if it can be fused with other sources, like Human Intelligence (HUMINT) and Signals Intelligence (SIGINT).

Therefore, OSINT tools that enable broad and targeted data collection and risk analysis can be valuable for identifying and analyzing disinformation campaigns. Fivecast ONYX provides users with unparalleled access to the information environments through which disinformation is spread, enabling efficient and effective identification and assessment of key narratives, information campaigns, and especially disinformation. Fivecast ONYX layers data collection capability with AI-enabled, user-configurable risk detectors that prioritize media, content, and relationships for analyst review. In the context of disinformation campaigns, these could be examples of disinformation content or key influencers spreading manipulative narratives throughout the information environment.
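To make the idea of prioritizing content for analyst review more concrete, here is a minimal Python sketch. It is purely illustrative and is not how Fivecast ONYX or any specific product works. The `Post` structure, the `NARRATIVE_TERMS` keyword lists (loosely based on the falsehoods discussed earlier in this blog), and the scoring rule are all assumptions made for the example. A real detector would need far richer signals than simple keyword matching.

```python
from dataclasses import dataclass


@dataclass
class Post:
    """Hypothetical, simplified representation of a collected post."""
    author: str
    text: str
    shares: int


# Assumed keyword lists for illustration only, based on narratives named in this blog.
NARRATIVE_TERMS = {
    "denazification": ["denazification", "nazi regime"],
    "biolabs": ["biolab", "biological weapons"],
    "donbas_genocide": ["genocide"],
}


def match_narratives(text):
    """Return the sorted names of narratives whose keywords appear in the text."""
    lowered = text.lower()
    return sorted(
        name
        for name, terms in NARRATIVE_TERMS.items()
        if any(term in lowered for term in terms)
    )


def prioritize(posts):
    """Rank posts that match at least one narrative, highest score first.

    The score is an assumed heuristic: number of matched narratives
    multiplied by (1 + share count), so widely shared posts rank higher.
    """
    flagged = []
    for post in posts:
        narratives = match_narratives(post.text)
        if narratives:
            score = len(narratives) * (1 + post.shares)
            flagged.append((score, post, narratives))
    # Sort on the score only, so tied posts never need to be compared directly.
    flagged.sort(key=lambda item: item[0], reverse=True)
    return flagged
```

In this sketch, a post that matches no narrative is dropped from the review queue regardless of how widely it was shared, while a post touching several narratives is pushed up the queue. Keyword matching of this kind will also flag legitimate reporting and debunking, which is one reason analyst review remains essential.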

As the digital landscape evolves, analysts must stay vigilant and adapt their strategies accordingly. Integrating OSINT and automated risk detection technologies into investigations provides invaluable insights and enhances the effectiveness of identifying and monitoring disinformation campaigns. By embracing these tools, analysts can better protect individuals and societies from the harmful effects of disinformation.

Learn how advanced data collection and an intuitive, customizable and AI-powered risk detection framework can be applied to your intelligence needs – request a demo below.